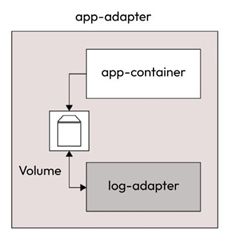

As its name suggests, the adapter pattern helps change something to fit a standard, much like the cell phone and laptop adapters that convert mains power into something our devices can use. A great example of the adapter pattern is transforming log files so that they conform to an enterprise standard and can feed your log analytics solution:

Figure 5.7 – The adapter pattern

The pattern helps when you have a heterogeneous solution that outputs log files in several formats but a single log analytics solution that only accepts messages in a particular format. There are two ways of handling this: changing the application code so that it outputs log files in the standard format, or using an adapter container to perform the transformation.

Let’s look at the following scenario to understand it further.

We have an application that continuously outputs log lines without a timestamp at the beginning. Our adapter should read the stream of logs and prepend a timestamp to each line as soon as it is generated.
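To make the adapter's job concrete before we look at the manifest, here is a minimal sketch of the transformation in POSIX shell. It assumes the moreutils `ts` utility (which the manifest installs) is not available locally, so a `read` loop plus `date` stands in for it; the function name `add_timestamps` is hypothetical. It produces the same `[%Y-%m-%d %H:%M:%S]` prefix that the adapter uses.

```shell
#!/bin/sh
# Sketch of the adapter's transformation (assumption: moreutils `ts` is not
# installed locally, so `date` is called once per line instead).
# add_timestamps is a hypothetical name: it reads log lines on stdin and prints
# each one prefixed with "[YYYY-MM-DD HH:MM:SS] ".
add_timestamps() {
  # IFS= and -r keep leading whitespace and backslashes in each line intact
  while IFS= read -r line; do
    printf '[%s] %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$line"
  done
}

# Example: two raw lines in, two timestamped lines out
printf 'This is a log line\nThis is a log line\n' | add_timestamps
```

Because the timestamp is taken when the line is read, it reflects when the adapter saw the line rather than exactly when the application wrote it; `ts` behaves the same way.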

For this, we will use the following pod manifest, app-adapter.yaml:

    …
    spec:
      volumes:
      - name: logs
        emptyDir: {}
      containers:
      - name: app-container
        image: ubuntu
        command: ["/bin/bash"]
        args: ["-c", "while true; do echo 'This is a log line' >> /var/log/app.log; sleep 2;done"]
        volumeMounts:
        - name: logs
          mountPath: /var/log
      - name: log-adapter
        image: ubuntu
        command: ["/bin/bash"]
        args: ["-c", "apt update -y && apt install -y moreutils && tail -f /var/log/app.log | ts '[%Y-%m-%d %H:%M:%S]' > /var/log/out.log"]
        volumeMounts:
        - name: logs
          mountPath: /var/log

The pod contains two containers: the app container, a simple Ubuntu container that appends "This is a log line" to /var/log/app.log every 2 seconds, and the log adapter, which continuously tails the app.log file, adds a timestamp at the beginning of each line using the ts utility from the moreutils package, and writes the resulting output to /var/log/out.log. Both containers share the logs volume, an emptyDir volume mounted at /var/log in both containers.
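The key mechanism here is the shared emptyDir volume: one container writes to it and the other reads from it. A rough local approximation is sketched below, assuming a temporary directory (held in the hypothetical variable `shared_dir`) in place of the volume and a bounded loop in place of the container's infinite one. It reads app.log once instead of following it with `tail -f`, so the script terminates.

```shell
#!/bin/sh
# Local approximation of the app-adapter pod (assumption: a temporary directory
# plays the role of the shared emptyDir volume mounted at /var/log).
shared_dir=$(mktemp -d)

# "app-container": write three raw lines to app.log.
# The real container loops forever with a 2-second sleep; this loop is bounded.
i=0
while [ "$i" -lt 3 ]; do
  echo 'This is a log line' >> "$shared_dir/app.log"
  i=$((i + 1))
done

# "log-adapter": read app.log from the same directory and write timestamped
# copies to out.log. The pod uses `tail -f ... | ts`; reading the file once
# lets this script end.
while IFS= read -r line; do
  printf '[%s] %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$line"
done < "$shared_dir/app.log" > "$shared_dir/out.log"

# Both files now sit side by side in the shared directory
ls "$shared_dir"
cat "$shared_dir/out.log"
# Clean up afterwards with: rm -r "$shared_dir"
```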

Now, let’s apply this manifest using the following command:

$ kubectl apply -f app-adapter.yaml

Let’s wait a while and check whether the pod is running by using the following command:

    $ kubectl get pod app-adapter
    NAME          READY   STATUS    RESTARTS   AGE
    app-adapter   2/2     Running   0          8s

Now that the pod is running, we can get a shell into the log adapter container by using the following command:

$ kubectl exec -it app-adapter -c log-adapter -- bash

When we get into the shell, we can cd into the /var/log directory and list its contents using the following command:

root@app-adapter:/# cd /var/log/ && ls

app.log apt/ dpkg.log out.log

As we can see, the directory contains app.log and out.log, along with apt/ and dpkg.log, which were created when the adapter installed moreutils. Now, let's use the cat command to print both log files and compare them.

First, cat the app.log file using the following command:

root@app-adapter:/var/log# cat app.log

This is a log line

This is a log line

This is a log line

Here, we can see the raw log lines written by the app container, without any timestamps.

Now, cat the out.log file to see what we get using the following command:

root@app-adapter:/var/log# cat out.log

[2023-06-18 16:35:25] This is a log line

[2023-06-18 16:35:27] This is a log line

[2023-06-18 16:35:29] This is a log line

Here, we can see a timestamp in front of each log line, which means that the adapter pattern is working correctly. You can now export this log file to your log analytics tool.
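Before exporting out.log, you may want a quick sanity check that every line carries the timestamp prefix. The following is a hypothetical helper, `check_timestamped`, that is not part of the original example; it assumes the `[%Y-%m-%d %H:%M:%S]` format configured in the adapter's `ts` command.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if FILE is non-empty and every line starts
# with a "[YYYY-MM-DD HH:MM:SS] " prefix, i.e. the format produced by
# ts '[%Y-%m-%d %H:%M:%S]'.
check_timestamped() {
  # An empty or missing file fails: the adapter has produced nothing yet
  [ -s "$1" ] || return 1
  # grep -v selects lines that do NOT match; -q turns that into an exit status,
  # and ! inverts it so the function succeeds only when no such line exists
  ! grep -Evq '^\[[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2}] ' "$1"
}

# Usage inside the log-adapter container:
#   check_timestamped /var/log/out.log && echo "out.log is ready to export"
```

Treating an empty file as a failure is a design choice: a log adapter that has produced no output usually signals a problem rather than success.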

Summary

We have reached the end of this critical chapter. We've covered enough ground to get you started with Kubernetes and to help you understand and appreciate the best practices surrounding it.

We started with an introduction to Kubernetes and why we need it, and then discussed bootstrapping a Kubernetes cluster using Minikube and KinD. Then, we looked at the pod resource and discussed creating and managing pods, troubleshooting them, ensuring your application's reliability using probes, and multi-container design patterns, which show why Kubernetes uses pods instead of containers in the first place.

In the next chapter, we will deep dive into the advanced aspects of Kubernetes by covering controllers, services, ingresses, managing a stateful application, and Kubernetes command-line best practices.