It’s story time! Let’s simplify Kubernetes Services.

Imagine you have a group of friends who love to order food from your restaurant. Instead of delivering each order to their houses separately, you set up a central delivery point in their neighborhood. This delivery point (or hub) is your “service.”

In Kubernetes, a Service is like that central hub. It’s a way for the different parts of your application (such as your website, database, or other components) to talk to each other, even if they run in separate containers or on separate machines. It gives them easy-to-remember addresses so that they can find each other without getting lost.

The Service resource helps expose Kubernetes workloads to the internal or external world. As we know, pods are ephemeral resources: they can come and go. Every pod is allocated a unique IP address and hostname, but when a pod is destroyed and replaced, the new pod gets a different IP address and hostname. Consider a scenario where one of your pods wants to interact with another. Because pods are transient, you cannot configure a stable endpoint for them. If you use the IP address or the hostname of a pod as the endpoint and the pod is destroyed, you will no longer be able to connect to it. Therefore, exposing a pod on its own is not a great idea.

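Kubernetes offers the Service resource to give a group of pods a single static IP address. Apart from exposing the pods on that address, a Service also load-balances traffic between them. The text describes this as round-robin, though the exact selection depends on how kube-proxy is configured: in iptables mode a backend pod is picked at random, while IPVS mode uses round-robin by default. Either way, traffic is spread roughly evenly between the pods, and a Service is the standard method of exposing your workloads.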
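To make this concrete, here is a minimal sketch of a Service manifest. The Service name `web-service`, the label `app: web`, and both port numbers are hypothetical placeholders chosen for illustration; they do not come from this chapter.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-service        # hypothetical Service name
spec:
  selector:
    app: web               # hypothetical label; pods carrying it become backends
  ports:
    - protocol: TCP
      port: 80             # port exposed on the Service's static IP
      targetPort: 8080     # assumed port the pods' containers listen on
```

Because no `type` is set, Kubernetes treats this as a ClusterIP Service. Any pod labeled `app: web` is picked up as a backend automatically, so pods can be replaced without clients having to change the address they call.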

Service resources are also assigned a static fully qualified domain name (FQDN) based on the Service name and its namespace. Therefore, you can use the Service resource FQDN instead of the IP address within your cluster, so your endpoints keep working even when the underlying pods change.
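As an illustration, a Service's FQDN typically takes the form `<service-name>.<namespace>.svc.cluster.local`, assuming the cluster uses the default `cluster.local` domain. The sketch below shows a client pod that reaches a backend through such a name instead of a pod IP. The Service name `web-service`, the `default` namespace, the pod name, the image, and the `BACKEND_URL` variable are all hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend           # hypothetical client pod
spec:
  containers:
    - name: frontend
      image: nginx         # placeholder image for illustration only
      env:
        - name: BACKEND_URL  # hypothetical variable read by the application
          # FQDN of an assumed Service "web-service" in the "default" namespace
          value: "http://web-service.default.svc.cluster.local:80"
```

Within the same namespace, the short name `web-service` usually resolves as well, but the full FQDN is unambiguous across namespaces.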

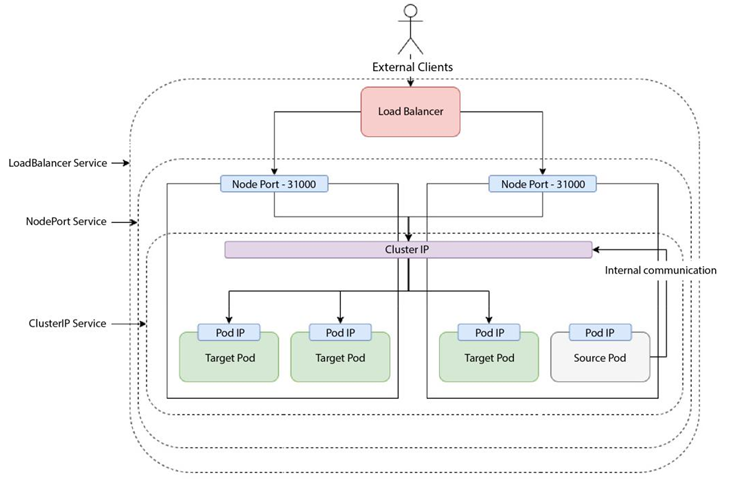

Now, coming back to Service resources, there are multiple Service resource types— ClusterIP,

NodePort, and LoadBalancer, each having its own respective use case:

Figure 6.4 – Kubernetes Services

Let’s understand each of these with the help of examples.